What you should know #

I initially created this cheatsheet as a personal reference while preparing for the OCI DevOps Professional exam. However, I think that more people might benefit from it and use it as a reference before and/or after taking the exam.

I would like to point out that I am preparing for the exam exclusively with the help of the Oracle Learning Path. I suggest that you take the time and effort to enrol in and complete the full preparation path created by the experts at Oracle Learning, in order to gain a full understanding of each topic.

Exam Topics #

- Configuration management and Infrastructure as Code (OCI Ansible – OCI Terraform)

- Microservices and Container Orchestration, OCIR (Oracle Cloud Infrastructure Registry), OKE (Oracle Kubernetes Engine)

- Continuous Integration & Continuous Delivery (CI/CD)

- DevSecOps

- Observability Services

Please note that this is only a cheatsheet. There are no hands-on exercises provided in this guide.

Oracle Learning Path provides an amazing hands-on workshop that helps you master all the topics mentioned above.

What does DevOps mean? #

DevOps is a way of working that allows continuous delivery of high quality software.

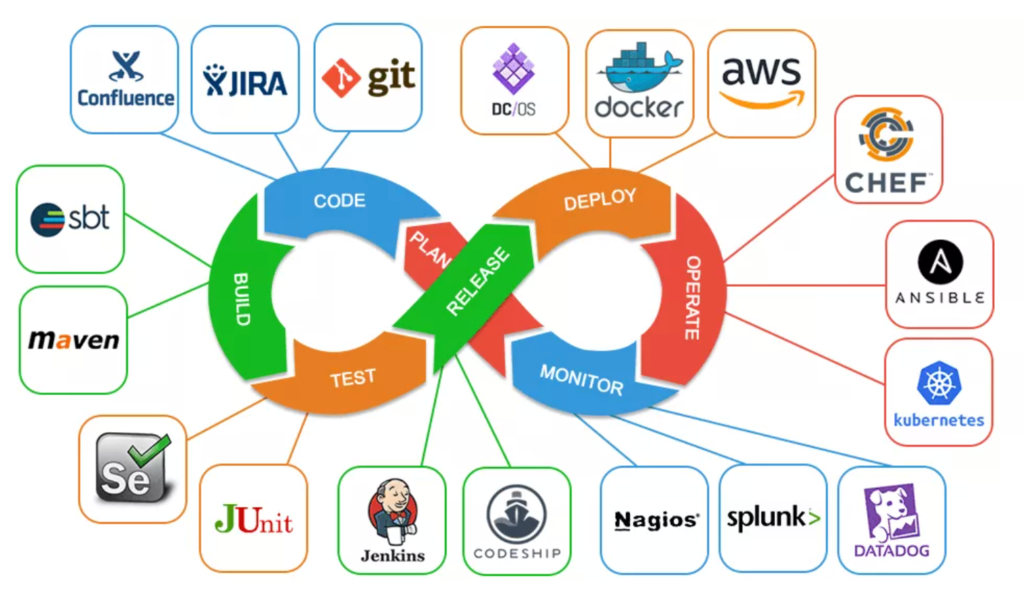

Here you can see some of the tools used by a DevOps engineer in order to accomplish each phase of the DevOps life cycle.

Configuration Management (CM) & Infrastructure as Code (IaC) #

| Ansible (CM) | Terraform (IaC) |
| --- | --- |
| Supports mutable infrastructure: it can update an existing component in place. | Supports immutable infrastructure: it destroys and rebuilds a component instead of changing it. |
| Works via SSH | Works via API calls (of the cloud provider) |

How does Ansible work? #

- Ansible follows an agentless architecture. This means that it doesn't need an agent running on each of the nodes it automates. Instead, Ansible uses SSH to connect to each target machine and manages them all from a single control machine.

- Ansible can execute a variety of ad hoc commands, initiated from the control machine. For example, we can run one command to update nginx, and the control machine will execute it across the entire target fleet. Ad hoc commands are great for one-off tasks, but for reusability we use Playbooks, which we will discuss next. The following is an example of an ad hoc command:

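```shell
# -a passes the arguments to the shell module; the command must be non-interactive,
# since an editor such as vi cannot be driven through an ad hoc command
ansible webservers -m shell -a "cat /home/opc/app.py"
```

- Ansible uses Playbooks, YAML files that group a set of tasks (each task calls a module) so they can be reused.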

- The group of nodes that Ansible applies the Playbooks against is called the Inventory.
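To make the Playbook idea concrete, here is a minimal sketch that turns the "update nginx" ad hoc example into a reusable Playbook. It assumes Oracle Linux targets that use yum, the webservers group from the inventory file shown in the next section, and a hypothetical file name, site.yaml.

```yaml
# site.yaml (hypothetical name): a minimal Playbook sketch
- name: Keep nginx up to date on the web servers
  hosts: webservers        # inventory group this play targets
  become: true             # run the tasks with sudo on the target hosts
  tasks:
    - name: Install nginx or update it to the latest package
      ansible.builtin.yum:
        name: nginx
        state: latest
    - name: Make sure nginx is running and starts on boot
      ansible.builtin.service:
        name: nginx
        state: started
        enabled: true
```

From the control machine it would be run with ansible-playbook -i hosts.yaml -u opc site.yaml, and the same file can be re-run at any time, which is what makes it reusable compared to an ad hoc command.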

Ansible with OCI #

- First, we need to list all the hosts that we want to manage in an inventory (hosts) file.

```yaml
# hosts.yaml
all:
  hosts:
    129.156.12.49:
  children:
    webservers:
      hosts:
        129.134.23.44:
    dbserver:
      hosts:
        123.34.456.34:   # not a valid IPv4 address (456 > 255); replace with your DB server's IP
```

- While in Cloud Shell, open the burger menu of the Cloud Shell, click “Upload File”, and upload the file that we created.

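- Run ad hoc commands with Ansible from the Cloud Shell. For example, the following checks that Ansible can connect to every host in the inventory as the opc user:

```shell
ansible all -m ping -i ~/hosts.yaml -u opc
```

Terraform Intro #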

With Terraform, we can provision infrastructure on various cloud providers, including OCI. Terraform code is declarative: we describe the infrastructure we want, and the Terraform engine works out which cloud components to create, modify or destroy.

For example, we can use this Terraform code block to provision an OCI VCN:

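```hcl
# The var.* values must be declared as input variables elsewhere in the configuration
resource "oci_core_vcn" "test_vcn" {
  compartment_id = var.compartment_id
  cidr_block     = var.vcn_cidr_block
  # The "Operations" tag namespace and its "CostCenter" key must already exist in the tenancy
  defined_tags   = { "Operations.CostCenter" = "42" }
  display_name   = var.vcn_display_name
}
```

You can find a complete Terraform OCI infrastructure provisioning example in this tutorial.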

OCI Resource Manager #

When we run terraform apply, Terraform first refreshes the state (tfstate) so that it reflects the real infrastructure, then compares it with the configuration to produce a diff (the plan). terraform apply uses this diff to make the changes to the infrastructure.

When working in teams, we need to make sure that the state file is in sync across all engineers, so that the infrastructure is provisioned successfully.

We could store the tfstate in a centralised version control system like git, but we should not, since it can contain sensitive information.

So how can we make sure that all the engineers have the tfstate file synced?

This is where OCI Resource Manager comes into play. With OCI RM, the Terraform code stays in a git repo, while terraform plan and apply run in OCI, which also stores the state file. This way the tfstate stays in sync for the whole team.

Creating a Resource Manager Stack #

A stack is the set of OCI resources that corresponds to a Terraform configuration. There are various ways to provide that configuration; in this example we use a git repo.

- From the Burger menu head over to Developer Services and then Resource Manager

- Select Stacks and click on Create Stack button

- Select the Source Code Control System radio button

- Select the Configuration Source Provider (a connection to the GitLab or GitHub repo)

- Select the Terraform code repository

- Copy and Paste the compartment id

- Optionally, override the region variable from the OCI Console

Resource Manager Actions #

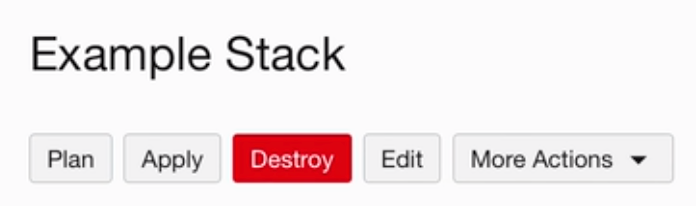

We can now run Plan and Apply from the Console, and this way we make sure that the state is always synced and up to date.

Syncing Resource Manager #

So far we have assumed that all the changes in our tenancy are made through Terraform. That is not realistic, since a user can still change our resources manually through the Console.

What does drift mean? #

Drift is when the infrastructure slowly changes away from its configured state, for example when someone manually adds a new CIDR block to a VCN from the OCI Console.

With Resource Manager, we can run drift detection and get a detailed drift report for each resource.

From the Resource Manager console, click the More Actions button, and then Drift Report.

Resource Discovery #

We can also start by creating the infrastructure manually in the OCI Console and then create a Resource Manager Stack based on that infrastructure.

To create a Stack from an existing compartment:

- Follow the steps from the “Creating a Resource Manager Stack” section but instead of choosing Source Code Control System, select Existing Compartment.

- In the Terraform Provider Services section select the “Core” services only

Extending the OCI Console #

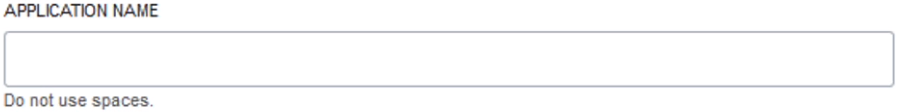

Instead of hardcoding options that vary between regions and environments, such as the instance shape, the instance name or the CIDR block values, inside the Terraform configuration, we can extend the OCI Console by adding a schema.yaml file. This file describes the stack's variables, which are rendered as UI components when creating a Stack in Resource Manager.

For example, the following yaml block:

```yaml
variables:
  functions_app_name:
    type: string
    title: "Application Name"
    description: "Please do not use spaces"
```

It will render on the Stack creation page like this:

You can find more examples of schema-based templates in this GitHub example.
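A fuller schema.yaml might look like the sketch below. The top-level keys (title, schemaVersion, version, locale, variableGroups) and the required and pattern properties are written from memory of the Resource Manager schema format, so check them against the official schema reference; the stack title is hypothetical, and the pattern is one possible way to enforce the "no spaces" hint instead of only stating it.

```yaml
title: "Functions application stack"   # hypothetical stack title
schemaVersion: 1.1.0
version: "20190304"
locale: "en"
variableGroups:
  - title: "Application settings"      # renders these variables as one section of the form
    variables:
      - functions_app_name
variables:
  functions_app_name:
    type: string
    title: "Application Name"
    description: "Please do not use spaces"
    required: true
    pattern: "^[^ ]+$"                 # rejects any value that contains a space
```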

Container Management #

OCIR Introduction

OCIR is a highly available, public or private Docker registry, running as a fully managed service on OCI.

Terminology #

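Region key: for example phx, giving the registry endpoint phx.ocir.io (the region identifier form, us-phoenix-1.ocir.io, also works)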

Repository name: project01/foo-bar-repo

Tenancy namespace: For example the namespace of the foo-bar tenancy can be dkghasww1

Registry identifier: <region-key>.ocir.io/<tenancy-namespace>

Image path: <region-key>.ocir.io/<tenancy-namespace>/<repo-name>:<tag>

Managing OCIR #

Creating a registry #

In your console, from the hamburger menu, browse to Developer Services and then Container Registry.

Select the compartment in which you wish to create the repository, and click the Create Repository button.

Push an Image to OCIR #

- We first need to generate an auth token.

From the Profile menu, open User Settings, and in the Resources section click Auth Tokens, then Generate Token.

- The next step is to log in to OCIR:

```shell
# Username: <tenancy-namespace>/<username>; password: the generated auth token
docker login <region-key>.ocir.io
```

- Then, we need to tag our image with the full image path:

```shell
docker tag my-demo-app:v1 <region-key>.ocir.io/<tenancy-namespace>/<repo-name>:v1
```

- Finally, push the full image path to OCIR:

```shell
docker push <region-key>.ocir.io/<tenancy-namespace>/<repo-name>:v1
```

DevOps with Managed Kubernetes #

Node

A node is the smallest unit of computing hardware in Kubernetes: a worker machine, virtual or physical. It runs one or more applications as containers, grouped in pods.

Pods

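A pod wraps one or more containers (most often a single one) that share a private IP address. A pod runs on a node; pods are not moved between nodes, but if a pod or its node fails, a controller such as a Deployment easily replaces it with a new pod, possibly on another node.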

Service

A Service is a separate component that provides stable network access to a set of pods. Even if a pod dies, the Service stays alive, so when a replacement pod spins up we do not need to change the IP address used to reach the container running in it.
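A minimal Service manifest sketch, assuming pods labelled app: my-demo-app that listen on port 8080 (both hypothetical). The Service keeps one stable address and forwards traffic to whichever pods currently match its selector.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-demo-app
spec:
  type: ClusterIP          # stable virtual IP reachable inside the cluster
  selector:
    app: my-demo-app       # traffic goes to any running pod carrying this label
  ports:
    - port: 80             # port the Service exposes
      targetPort: 8080     # port the container listens on
```

Setting type: LoadBalancer instead would make OKE provision an OCI load balancer in front of the Service.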

Ingress

Ingress is an API object that manages access to Kubernetes Services from outside the cluster. It lets us define routing rules, for example by host name or URL path, to manage the traffic.
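As a sketch of such a routing rule, the Ingress below sends requests for app.example.com/api to a Service. The host, Service name and port are hypothetical, and it assumes an ingress controller (here NGINX, selected by ingressClassName) is already installed in the cluster.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-demo-app
spec:
  ingressClassName: nginx            # assumes an NGINX ingress controller is installed
  rules:
    - host: app.example.com          # hypothetical host name
      http:
        paths:
          - path: /api
            pathType: Prefix         # matches /api and anything below it
            backend:
              service:
                name: my-demo-app    # hypothetical Service name
                port:
                  number: 80
```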

Deployment

A Deployment declares the desired state of our application (container image, number of replicas). Kubernetes then manages the pods to match it automatically, and lets us scale or roll back the deployment.
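A minimal Deployment sketch that runs three replicas of the image pushed to OCIR earlier. The names are hypothetical, and ocir-secret stands for a docker-registry Secret holding OCIR credentials, which is needed when the repository is private.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-demo-app
spec:
  replicas: 3                        # desired pod count; Kubernetes keeps this many running
  selector:
    matchLabels:
      app: my-demo-app
  template:                          # pod template used to create each replica
    metadata:
      labels:
        app: my-demo-app
    spec:
      imagePullSecrets:
        - name: ocir-secret          # hypothetical Secret with OCIR login credentials
      containers:
        - name: my-demo-app
          image: "<region-key>.ocir.io/<tenancy-namespace>/<repo-name>:v1"
          ports:
            - containerPort: 8080
```

Scaling is then kubectl scale deployment my-demo-app --replicas=5, and kubectl rollout undo deployment/my-demo-app rolls back to the previous revision.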

ConfigMap

Stores connection strings, env variables, hostnames etc.

Secrets

Stores sensitive data like passwords, SSH keys etc.
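A sketch of both objects side by side, with hypothetical names and values. Note that Secret data is only base64-encoded, not encrypted, which is why encryption at rest (covered in the DevSecOps section) matters.

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: my-demo-app-config
data:
  DB_HOST: "db.example.internal"     # hypothetical non-sensitive settings
  LOG_LEVEL: "info"
---
apiVersion: v1
kind: Secret
metadata:
  name: my-demo-app-secret
type: Opaque
stringData:                          # written as plain text here; stored base64-encoded
  DB_PASSWORD: "change-me"           # placeholder; never commit real secrets to git
```

A container can load both as environment variables through envFrom, using a configMapRef and a secretRef that point at these names.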

Volumes

Kubernetes Volumes give the containers in a pod access to directories and files. Unlike the container's own filesystem, a volume survives a container restart.

StatefulSet

Unlike a Deployment, a StatefulSet maintains a sticky identity (a stable name and its own storage) for each pod, which suits pods that need to persist data.
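A sketch of a StatefulSet with two replicas, each getting its own PersistentVolumeClaim through volumeClaimTemplates. The names and image are hypothetical, a headless Service called my-db is assumed to exist, and 50Gi is used because OCI block volumes start at 50 GB.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: my-db
spec:
  serviceName: my-db                 # headless Service that gives pods stable DNS names
  replicas: 2                        # pods are named my-db-0 and my-db-1
  selector:
    matchLabels:
      app: my-db
  template:
    metadata:
      labels:
        app: my-db
    spec:
      containers:
        - name: db
          image: "<region-key>.ocir.io/<tenancy-namespace>/<repo-name>:v1"   # hypothetical image
          volumeMounts:
            - name: data
              mountPath: /data
  volumeClaimTemplates:              # one claim per pod, reattached to the same pod name
    - metadata:
        name: data
      spec:
        accessModes: ["ReadWriteOnce"]
        resources:
          requests:
            storage: 50Gi
```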

Kubelet

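The kubelet provides node-level pod management: it is the agent on each node that makes sure the containers of the pods scheduled to that node are running.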

Kube-proxy

kube-proxy is a network proxy and load balancer running on each node. It maintains the network rules that implement Services, so that traffic sent to a Service reaches its pods.

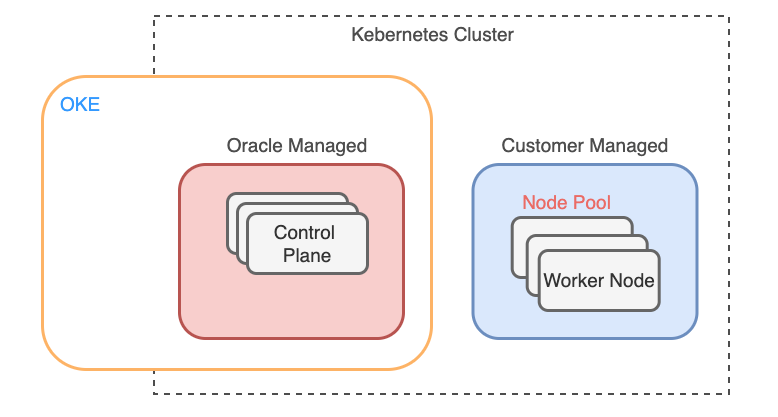

OKE

OKE is a fully managed Kubernetes environment on Oracle Cloud, built on open source Kubernetes. Oracle manages the control plane; with managed nodes, the customer manages the worker nodes and their configuration.

Kubectl tool

kubectl lets us perform operations on clusters. We can use a local kubectl installation or run it from Cloud Shell in the OCI Console. To access a private Kubernetes cluster, we need to configure a bastion first.

CI/CD #

In this module we will talk about the OCI DevOps service and its projects.

The first thing we need to configure to work with the DevOps service is IAM policies. First, we create a group of users and allow that group to manage the DevOps service. Then we create dynamic groups and allow the DevOps resources (such as build and deployment pipelines) to interact with other OCI resources.

Next we will talk about various resources provided by DevOps Projects.

Get started

First, create a new DevOps project from the Console. Then configure authentication for the project's code repositories, using an SSH key or an auth token.

Code Repository

We can create a new code repository, transfer an existing project from GitHub or any other provider or mirror an existing repo in the DevOps Project Code Repository.

Important files:

- build_spec.yaml: the set of instructions the build pipeline follows to build the code. It can define environment variables, steps that run in sequence and much more. The input artifacts (the code to be built) can come from the DevOps Code Repository or from any external code repository. The output artifacts can be a container image, a Kubernetes manifest, a generic artifact or a deployment configuration.
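A minimal build_spec.yaml sketch that builds a container image and exposes it as an output artifact. The field names follow the OCI DevOps build specification as I understand it, and OCI_BUILD_RUN_ID and OCI_PRIMARY_SOURCE_DIR are built-in variables; the APP_NAME value and the artifact name are hypothetical, so verify the details against the build spec reference.

```yaml
version: 0.1
component: build
timeoutInSeconds: 1800
shell: bash
env:
  variables:
    APP_NAME: "my-demo-app"            # hypothetical value
  exportedVariables:
    - BUILDRUN_HASH                    # made available to later pipeline stages
steps:
  - type: Command
    name: "Compute a short build hash"
    command: |
      export BUILDRUN_HASH=$(echo ${OCI_BUILD_RUN_ID} | rev | cut -c 1-7)
  - type: Command
    name: "Build the container image"
    command: |
      cd ${OCI_PRIMARY_SOURCE_DIR}
      docker build -t ${APP_NAME} .
outputArtifacts:
  - name: app_image                    # referenced later by a Deliver Artifacts stage
    type: DOCKER_IMAGE
    location: my-demo-app              # must match the local image tag built above
```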

To mirror an existing git repo with DevOps Code Repo, we need to:

- Create an access token in the git provider

- Create a Vault Secret for the access token

- Create Dynamic Group & IAM Policy for DevOps Project to access vault secrets in the compartment

- Mirror code repository through the DevOps Service

Artifacts / Container Registry

Artifacts are the software libraries and packages used to deploy applications. We can create a maximum of 500 repositories with a total size of 500 GB per tenancy. We are not obliged to use the OCI DevOps Artifacts service; we can use any external artifact provider instead.

Environments

Environments are collections of resources where our artifacts are deployed.

Build Pipelines (CI)

CI covers creating, committing, testing and versioning code. The build specification triggers tests and generates the desired artifacts. We create the different stages of the pipeline, and the Managed Build stage runs the steps specified in the build_spec.yaml file.

Deployment Pipeline

We use the Deployment Pipeline to perform automatic or manual deployments to staging or production environments, and to run canary and smoke tests before the release.

We need to configure the Deployment Environments (OKE, VM etc), Control (manual or automatic deployment) and Integration (Functions to run some custom deployment logic) stages.

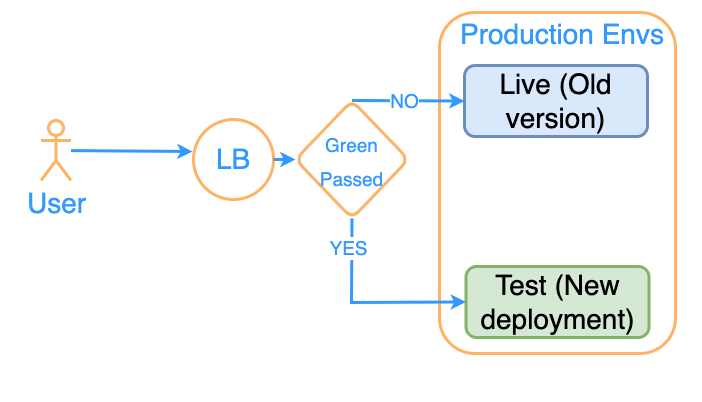

Deployment Strategy – Blue Green

Deploy the new version of the application to the Green production environment. If the new deployment runs as expected, redirect the load balancer traffic to Green; if not, keep the traffic on Blue. Once traffic flows to Green, apply the new deployment to Blue as well.

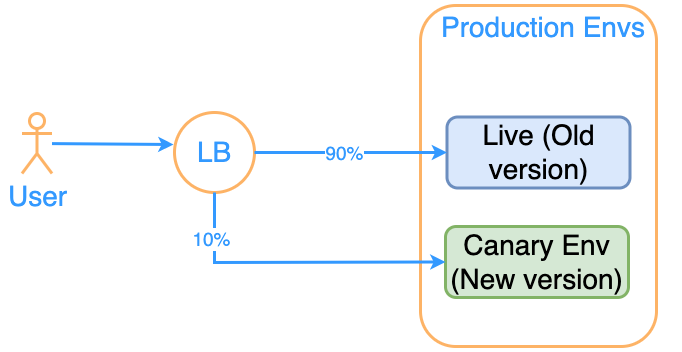
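OCI DevOps automates the switch in its blue-green stages; the sketch below is only a generic Kubernetes illustration of the idea, not how OCI DevOps implements it. It assumes two Deployments whose pods carry a hypothetical slot: blue or slot: green label, and one Service whose selector decides which of them receives the traffic.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-demo-app
spec:
  selector:
    app: my-demo-app
    slot: green          # was "blue"; changing this label moves all traffic at once
  ports:
    - port: 80
      targetPort: 8080
```

Rolling back is just switching the selector again, for example with kubectl patch service my-demo-app -p '{"spec":{"selector":{"slot":"blue"}}}'.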

Deployment Strategy – Canary

First, the new version is released to the Canary environment. Then a small share of user traffic is routed to the Canary environment, and the percentage is increased incrementally until 100% of the traffic goes to Canary.
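One common way to split traffic by percentage on Kubernetes is the NGINX ingress controller's canary annotations. This sketch assumes ingress-nginx is installed, a primary Ingress already serves app.example.com, and a hypothetical my-demo-app-canary Service fronts the new version; raising canary-weight step by step moves more traffic to it.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: my-demo-app-canary
  annotations:
    nginx.ingress.kubernetes.io/canary: "true"          # mark this Ingress as the canary
    nginx.ingress.kubernetes.io/canary-weight: "10"     # send about 10% of requests here
spec:
  ingressClassName: nginx
  rules:
    - host: app.example.com          # must match the host of the primary Ingress
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: my-demo-app-canary
                port:
                  number: 80
```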

Helm

A typical application deployed with Kubernetes has objects like Services, Secrets and Deployments, each with its own YAML configuration file. The same application often needs to be deployed to multiple environments, and all these YAML files must be adapted to each environment's specifications, which gets very tedious. Helm solves this problem: it can define, install and upgrade even the most complicated Kubernetes deployments. It is like pip for Python or yum for CentOS.

Helm Chart: contains all the resource definitions in YAML format. It consists of templates, for example a deployment.yaml describing how the application is deployed and a service.yaml describing how it is exposed.

Helm Release: an instance of a Helm Chart running in Kubernetes. Let's say we have an HTML website and want to run 2 instances of it: we would install the same chart twice, and 2 releases would be created.

Chart Repository: A place to store and share Helm Charts.
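Per-environment settings usually live in values files that the chart's templates read. Below is a sketch of a hypothetical values-staging.yaml; the keys follow the default chart scaffold generated by helm create, and the image path reuses the OCIR placeholders from earlier.

```yaml
# values-staging.yaml (hypothetical): overrides for the staging environment.
# Templates read these values, e.g. {{ .Values.replicaCount }}.
replicaCount: 1
image:
  repository: "<region-key>.ocir.io/<tenancy-namespace>/<repo-name>"
  tag: "v1"
service:
  type: ClusterIP
  port: 80
```

Running helm install my-app-staging ./my-demo-app -f values-staging.yaml and helm install my-app-prod ./my-demo-app -f values-production.yaml would create two releases of the same chart, each with its own values.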

OCI DevOps support for external tools

- Chef Knife: the knife-oci plugin lets users interact with OCI resources through Chef

- Grafana: queries OCI Monitoring and displays the results in Grafana

- Ansible Collection: provision resources in OCI through Ansible

- OCI DevOps plugin for Jenkins

- Terraform provider

DevSecOps #

OCI Vault

The basics of the OCI Vault service are covered in this section of the mentioned article.

OKE Security

IAM & RBAC

We can control permissions for accessing and managing OKE by using IAM policies, and also by using RBAC (Role-Based Access Control), the Kubernetes built-in authorisation system for cluster management tasks. RBAC provides additional fine-grained access control and can be integrated with IAM groups.
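A sketch of fine-grained RBAC: a Role that can only read pods in a hypothetical team-a namespace, bound to an IAM group. Referencing the IAM group by its OCID as the subject name reflects my understanding of how OKE maps IAM identities into RBAC, so treat it as an assumption to verify against the OKE documentation.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: pod-reader
  namespace: team-a                  # hypothetical namespace
rules:
  - apiGroups: [""]                  # "" is the core API group, where pods live
    resources: ["pods"]
    verbs: ["get", "list", "watch"]  # read-only access
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: pod-reader-binding
  namespace: team-a
subjects:
  - kind: Group
    name: "<iam-group-ocid>"         # assumption: OKE matches IAM groups by OCID
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: pod-reader
  apiGroup: rbac.authorization.k8s.io
```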

Kubernetes Secrets

Kubernetes keeps sensitive data like tokens or keys in etcd (an open source key-value store). By default, the master encryption key is managed for us, but we can choose to manage it ourselves with the help of OCI Vault, providing the key and controlling when it is rotated.

Cluster Security

We can use pod security policies to limit storage choices, restrict ports and more. Note that pod security policies were removed in Kubernetes 1.25.
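On clusters running Kubernetes 1.25 or later, where pod security policies are no longer available, the built-in Pod Security Admission is the usual replacement: labels on a namespace choose a predefined security profile. A minimal sketch with a hypothetical team-a namespace:

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: team-a
  labels:
    pod-security.kubernetes.io/enforce: restricted   # reject pods that violate the "restricted" profile
    pod-security.kubernetes.io/warn: restricted      # also show warnings when applying such pods
```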

Multi tenant considerations

namespace-as-a-service model: tenants share a cluster, and each tenant's application workloads are assigned to one or more namespaces, each with its own role bindings, network policies and resource quotas. The cluster control plane is shared across all tenants. Namespaces give us isolation, effectively virtual clusters. This is useful when we need multiple applications running on the same cluster, for example for testing purposes.
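A resource quota sketch for one tenant's namespace (the names and numbers are hypothetical). Once such a quota covers CPU and memory, pods in that namespace must declare requests and limits (or get defaults from a LimitRange), otherwise they are rejected.

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: tenant-a-quota
  namespace: tenant-a                # hypothetical namespace for one tenant
spec:
  hard:
    requests.cpu: "4"                # total CPU all pods in the namespace may request
    requests.memory: 8Gi
    limits.cpu: "8"
    limits.memory: 16Gi
    pods: "20"                       # maximum number of pods in the namespace
```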

cluster-as-a-service model: tenants get separate clusters and have full control of the cluster resources. This is better for production environments where we want a component of our application to run alone in its own cluster.

Image Security

To make sure that images are not tampered with and that we use trusted versions of software images, we can sign the images with Vault keys. An image is signed after it is built and pushed to the registry.

Oracle Functions Security

First and foremost, we need to configure IAM policies to authorise user groups for Oracle Functions.

We also need to restrict network access for function invocation, and keep the applications running inside the functions in a private subnet.

Observability #

Monitoring

Some DevOps metrics that can be found in the monitoring service:

oci_devops_code_repos, oci_devops_build, oci_devops_deployment.

Notifications

Logging

Events

Events are the result of an action happening in an OCI service. For example, when a developer pushes code to the DevOps Code Repository, an event is generated; we can catch this event with the Events service and act on it, for example by sending an email or triggering an Oracle Function.